We live in a data-driven economy where everything we do or say impacts the digital stream of data that feeds right into how businesses decide their next move. Organizations partner with data analytics companies that collect large amounts of data about their customers, clients, and competitors, and then help draw actionable insights that streamline their business strategies. As data becomes the most valuable commodity in the world, the real measure of its worth lies in its authenticity and reliability. And reliability of data goes hand in hand with data integrity – a concept that is becoming increasingly relevant to modern-day businesses.

What is Data Integrity?

Data integrity is the assurance that data will be kept accurate and consistent throughout its life cycle. The safety of data for regulatory compliance and security is of supreme concern to any system that handles data. The more data-driven an organization is, the more it needs to focus on achieving and maintaining data integrity. One set of compromised data could easily throw a company’s goals off track and could leave a lasting impact.

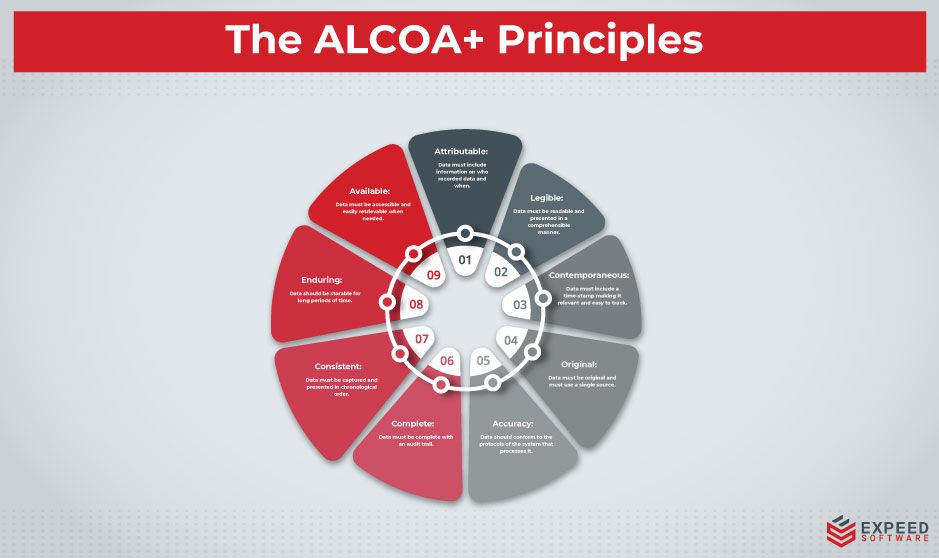

There are international standards and benchmarks for data integrity that data analytics companies follow globally. One such framework is the ALCOA+ by the US Food and Drug Administration (FDA). Originally introduced by the FDA as ALCOA for its own data regulation, the framework got accepted globally and was recently updated to ALCOA+ with four new principles. Let’s take a look at all nine principles of ALCOA+.

The original five

A – Attributable: All data must include information on who recorded it and when it was recorded.

L – Legible: The collected data must be readable and presented in a comprehensible manner. The legibility of the data extends to including the context under which the data was recorded. The data must not contain any unexplained symbols.

C – Contemporaneous: The data must be recorded at the time of the event. It essentially refers to including a time-stamp along with the data making it relevant and easy to track changes. It is important for companies to know how the data evolved through its lifecycle.

O – Original: All data collected must be original and must use a single source. Data should be preserved in an unaltered state any change should have a justifiable reason.

A – Accuracy: All collected data should conform to the protocols of the system that processes it. The data must correctly reflect the details of any event as it happened and must be error-free. For best accuracy, it is advisable to not make any edits to the data. If any edits are necessary, they must be properly documented and justified.

The additional four

Complete: The data collected must be complete with an audit trail that proves that no element has been deleted or lost.

Consistent: The data must be captured and presented in chronological order. There must be a date or time-stamp for every data point that matches the expected sequence of events.

Enduring: Data recorded should be storable for long periods of time. This principle emphasizes the need for safe storage of data for years or even decades after the actual event has taken place.

Available: Recorded data must be accessible and easily retrievable when needed. This principle emphasizes the need for categorizing and tagging data for easy access.

What are Different Types of Data Integrity?

Now that we understand the various principles involved in achieving data integrity, let’s look at the different types. Broadly speaking, we have two types of data integrity – physical and logical.

Physical data integrity:

It pertains to the physical storage of data. Companies must use high-quality hardware devices to store data. It also suggests that copies of data must be accessible to all stakeholders. Physical data integrity is often compromised by natural disasters like floods, earthquakes, power issues, and extreme weather situations.

Logical data integrity:

Logical integrity refers to data integrity when data resides in a database. Databases have numerous interrelated tables. Any changes to data in one table must not affect data in another table as data is used in different ways in a relational database. The idea is to protect data from human error. This type of integrity can be further classified into four types.

- Entity Integrity: This ensures that each data point recorded carries a unique value that identifies it. This unique identifier is called the primary key. For example, a database table of student information could have a student ID as the unique identifier for each student record. Thus the student ID becomes the primary key for this table.

- Referential Integrity: This encompasses the entire set of rules and guidelines that are enforced to keep the data in the database consistent and accurate. A series of processes are used to ensure that the data is stored and used uniformly. The concept of a foreign key is used for achieving this. The primary key of one table will be assigned as the foreign key of another table. For example, if table A contains student details and table B contains exam results, the primary key student ID from table A will act as the foreign key for table B. Thus foreign keys help ensure that data stays relevant and consistent between different tables.

- Domain Integrity: This ensures that each column only contains data that is appropriate for its domain. A domain is categorized by the format, type, and amount of data that is allowed. For instance, you would receive a validation error if you tried to enter a name under a column that was marked for Date of Birth. Every data system will have specific formats for its columns which need to be maintained.

- User-Defined Integrity: This refers to custom rules defined by the user to meet their specific requirements. Based on their industries, businesses often have to specify rules that will improve their data integrity, accessibility, and reliability.

Most Common Threats to Data Integrity

The importance of data integrity is a topic that is being discussed widely at global forums and even at international business conventions. Let’s look at some of the major threats to business data and some best practices that could go a long way in helping you maintain your data integrity.

Cyber-attacks: Cyber-attacks are very common these days. Even highly secure systems are being hacked or being subjected to viral attacks. Compromised data integrity provides an open pathway for such cyber threats and it can be the weakest link in the system security. The standard practices for enhancing your data security include enabling multi-factor authentications, regularly updating software and applications, checking for network vulnerabilities, and strengthening configuration management.

Data Loss and Recovery: There is nothing worse than losing valuable data that has not yet been backed up. It is very important that business intelligence companies that handle large volumes of data on a regular basis have predefined backup frequencies and associated processes. Organizations must be very clear as to how frequently data must be backed up, in what format should it be recorded, where should it be stored, and who should be handling the process. Version control and recovery mechanisms should be devised in such a way that even if data is compromised, the damage is kept to minimal. Today, most data centers have auto backup and recovery options.

Lack of Data Protocols: One of the most common reasons why data integrity gets compromised is human error. When data is being handled by resources who do not have proper training and who don’t have any protocols to follow, the chances of data tampering is higher. The only way to circumvent this situation is to establish relevant protocols within the system. Protocols are basically rules to standardize data handling. Business processes should be supported by corresponding protocols to ensure they are enforced. For instance, a data transfer protocol that instructs an authorized team to transfer data between environments only using SDM ensures that there is minimal human error. Training and setting up a robust data engineering team can be time-consuming and expensive. Alternatively, you can partner with data analytics consulting firms to help you process your data with minimum risk.

Data Engineering at Expeed Software

At Expeed, we understand that achieving and maintaining data integrity is not a one-time affair. The concept in itself is constantly evolving and requires regular updating. As a data analytics company, we have adopted a culture that advocates strong security architecture, data privacy policies, regular checks on network vulnerabilities, and the like. It is the only way to shield data from possible breaches due to lapses in integrity. Our team offers a wide range of data engineering and analytics services including data modernization, business intelligence, advanced analytics, and AI.

Expeed Software is a global software company specializing in application development, data analytics, digital transformation services, and user experience solutions. As an organization, we have worked with some of the largest companies in the world, helping them build custom software products, automate processes, drive digital transformation, and become more data-driven enterprises. Our focus is on delivering products and solutions that enhance efficiency, reduce costs, and offer scalability.