The Arrival of the AI

If future historians look back on our time, they will likely see two eras of human technology: the one before ChatGPT and the one after. We’re living on the cusp of what could be a new kind of human-machine collaboration, one made possible by a little (or rather, large) concept called Large Language Models, or LLMs.

Although the foundation for LLMs was laid by the advances in neural networks and deep learning in the early 2010s, the real breakthrough came with the introduction of the Transformer architecture in 2017. In roughly five years since then, researchers have built and steadily refined GPT (Generative Pre-trained Transformer) models, which are themselves among the best-known LLMs.

So how do AI models like GPT-4 work? How do LLMs fit into all this? And most importantly, do LLMs really hold the key to transforming humankind into an AI-assisted species?

We’ll probably need to wait and watch to find the answer to the last question (although it’s brimming with a potential Yes!). As for the first two, let’s find out.

Understanding LLMs in the World of AI

Ever since ChatGPT made its debut in November 2022, people have been awestruck by its capabilities. Not only did it show just how powerful Artificial Intelligence can be, but it also made LLMs the life of the party. A concept that until then was discussed mostly by data scientists and engineers became a conversation starter at business meetings and dinner tables alike, largely thanks to OpenAI, whose GPT models are themselves LLMs.

So what are LLMs really?

LLMs are a class of AI models trained on massive datasets of text and code and designed for natural language understanding and generation tasks.

Before moving on, a quick refresher for those not quite familiar with AI. AI can be broadly divided into two categories: Generative AI and Non-Generative AI. When you use AI technologies to create new data like text, images, music, or other forms of creative content, it falls under Generative AI. On the other hand, an AI that is programmed to analyze existing data and make predictions or reports is called Non-Generative, as it does not create any new data.

Coming back to LLMs, these models fall under Generative AI and handle tasks such as text generation, language translation, text summarization, and question answering. They can also be applied to analytical tasks such as sentiment analysis.
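To make the distinction between generative and non-generative AI concrete, here is a minimal Python sketch. It is a toy, not an LLM: the tiny corpus, the word lists, and the function names (`build_bigrams`, `generate`, `classify_sentiment`) are all made up for illustration. The first half creates new text by sampling a plausible next word, while the second half only labels text that already exists.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus; a real LLM is trained on vastly more text.
CORPUS = "the model reads text . the model writes text . the user reads the answer ."


def build_bigrams(text):
    """Map each word to the list of words seen right after it."""
    table = defaultdict(list)
    words = text.split()
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Generative: build a new word sequence by sampling each next word."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        options = table.get(words[-1])
        if not options:  # no known continuation, so stop early
            break
        words.append(rng.choice(options))
    return " ".join(words)


# Hypothetical keyword lists for the non-generative example.
POSITIVE = {"good", "great", "helpful"}
NEGATIVE = {"bad", "slow", "confusing"}


def classify_sentiment(text):
    """Non-generative: assign a label to existing text; nothing new is written."""
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    bigrams = build_bigrams(CORPUS)
    print(generate(bigrams, "the", 8))
    print(classify_sentiment("The answer was great and helpful"))
```

An LLM replaces the lookup table with a neural network that has billions of parameters, but the generative step is the same in spirit: predict a likely next token, append it, and repeat.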

Different Types of LLMs

Over the last decade, advancements in Natural Language Processing (NLP) and deep learning have fuelled the creation of powerful LLMs, each suited to specific applications and requirements. To understand them better, let’s look at the different types of LLMs that exist today and what they are commonly used for.

Natural Language Understanding (NLU): As the name would suggest, these LLMs are capable of deciphering the meaning of text and code. Most of the chat-based AI that you’ve interacted with is powered by NLU models that are exceptionally good at question answering, machine translation, text summarization, etc.

Natural Language Generation (NLG): These LLMs take question answering to the next level by creating text data. Basically, all the AI-generated news articles and blogs you’ve been reading about are the creative works of NLG-powered AI.

Machine Learning Research: These LLMs are built to support the development of new algorithms and techniques for natural language processing. Researchers use them to explore uncharted AI territories, leading to breakthroughs that benefit fields like NLP, linguistics, and beyond.

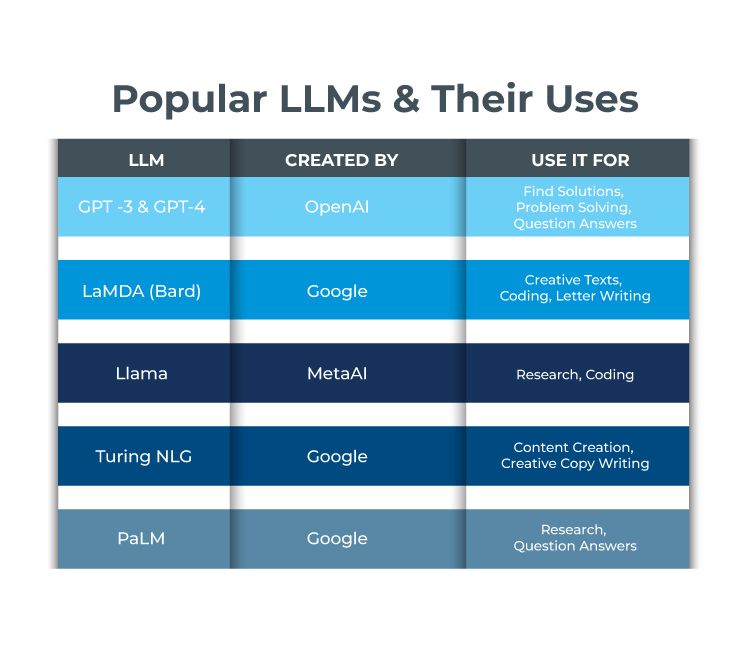

Learning to Leverage Today’s LLMs – Popular Platforms

Now that we understand the basic concepts behind LLMs, let’s look at some of the popular LLMs available today and how they differ from each other.

GPT-3 and GPT-4

GPT-3 and GPT-4 are among the most powerful and widely known LLMs available today. Developed by OpenAI, these models have been trained on a massive dataset of text and code and have absorbed the nuances of human language remarkably well. GPT-4 can draw on detailed information to answer complex questions, generate creative and marketable content, and offer coding solutions. Their grasp of context across languages also makes these models particularly useful for language translation.

LaMDA

The Language Model for Dialogue Applications, popularly known as LaMDA, is a Google AI model that forms the base for Google’s Bard, a solution much like ChatGPT. Google has designed Bard, which is trained on a different dataset of text and code, to be more informative and comprehensive than GPT-3. The aim is for Bard to better understand the nuances of human language and to generate text that is more likely to be relevant to users.

Llama

Much like OpenAI’s GPT models and Google’s LaMDA, Llama is an LLM designed for versatile NLU and machine translation functions. Developed by Meta AI, Llama sets itself apart with how readily it can be fine-tuned, which allows it to adapt flexibly to a wide array of tasks across various domains.

Turing NLG

Unlike the other platforms we just saw, Turing NLG was developed by Microsoft specifically for NLG functions. The LLM can therefore be used to generate text content for a variety of purposes, such as news articles, product descriptions, and marketing copy, producing text that is both creative and informative.

PaLM

PaLM is another LLM developed by Google AI, this time with a focus on machine learning research. While it can also be used for other tasks, including NLU and question answering, its core training is geared towards research. Although still under development, PaLM has already shown strong results on tasks that were long considered very difficult for machines.

As you can see, different LLMs have different strengths and weaknesses. So it is not a question of which one is better or more powerful. It is about finding the right LLM for your requirements. For example, if what you need is some creative text generation, then Turing NLG might be a good option. Alternatively, if you need an LLM that is good at understanding the nuances of human language, then you might want to choose Bard.

But what if you need an LLM to do complex data analysis specific to your business and then generate insights in simple human language?

As is always the case with technology, when you can’t make do with an existing version of a product, you customize it. LLMs are no exception: you can train them on your own dataset of text and code.

The Need for Custom Trained LLMs

If you’re serious about leveraging LLMs for your business, the off-the-shelf versions available today will most likely not cut it. In that case, you will want to train an LLM on your own datasets. For example, industries like healthcare and banking cannot rely on generic LLMs to support their unique and complex requirements.

Here are some scenarios in which you would need to further train a pre-trained LLM on your own data.

- When you need an industry-specific LLM.

- When you need to improve the accuracy of the LLM.

- When you need more creative or informative output.

Yet, embarking on the journey to train an LLM isn’t always a walk in the park. For starters, you must have access to a substantial dataset of text and code tailored to your specific domain. Even if you do have such access, you’ll need to plan the training carefully. And let’s not forget the investment of time and the expensive GPU servers that training an LLM demands.

Jumping into the fray without the right skills or a clear direction can lead to not just a steep learning curve but also a significant dent in your wallet.
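As a rough illustration of the planning involved, the sketch below shows one small, early step: cleaning a handful of domain-specific text records, removing duplicates, and splitting them into training and validation sets before any training begins. The sample records, the function names (`prepare_examples`, `to_jsonl`), and the one-JSON-object-per-line output are hypothetical choices for this example, not a requirement of any particular LLM platform.

```python
import json
import random


def prepare_examples(records, validation_fraction=0.1, seed=42):
    """Clean, de-duplicate, and split raw text records into (train, validation)."""
    seen = set()
    cleaned = []
    for text in records:
        normalized = " ".join(text.split())  # collapse stray whitespace
        if not normalized:
            continue  # skip empty records
        key = normalized.lower()
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        cleaned.append(normalized)

    # Shuffle with a fixed seed so the split is reproducible.
    rng = random.Random(seed)
    rng.shuffle(cleaned)

    # Hold out at least one example for validation when there is more than one.
    if len(cleaned) > 1:
        n_val = max(1, int(len(cleaned) * validation_fraction))
    else:
        n_val = 0
    return cleaned[n_val:], cleaned[:n_val]


def to_jsonl(examples):
    """Serialize examples as one JSON object per line."""
    return "\n".join(json.dumps({"text": t}) for t in examples)


if __name__ == "__main__":
    # Hypothetical records standing in for real domain data.
    raw = [
        "Loan  approved.",
        "loan approved.",
        "   ",
        "Claim denied.",
        "Patient discharged.",
    ]
    train, validation = prepare_examples(raw)
    print(to_jsonl(train))
    print(len(train), "training examples,", len(validation), "validation examples")
```

Holding back a validation set from the start gives you a way to check whether the custom-trained model is actually improving on your domain, rather than simply memorizing the training data.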

Limitless Horizons with LLMs

The thing about LLMs that makes them so remarkable is that they keep improving with every new generation, as they are trained on more data and refined with better techniques. Which means we’ve barely scratched the surface of what they are truly capable of. As researchers and innovators continue to push the boundaries of these models, we can only imagine the incredible breakthroughs and transformations they will usher in across various domains.

If we were to put it in human terms, the LLMs we see today are like a child that has just pulled itself up on a couch. That’s where we are right now, celebrating like proud parents at the marvels of technology and AI, and eagerly awaiting the next milestones. And while this technology grows, it’s up to us to make sure that we’re fully prepared to address any ethical concerns surrounding its misuse and to put it to good use: to improve healthcare, generate more clean energy, help businesses reduce their carbon footprint, and more.

At Expeed, our team of data scientists and engineers are passionate about experimenting and discovering more efficient LLMs. We have worked with some of the largest businesses in the world to create custom platforms that leverage LLMs to improve their sustainability impact scores. If you’d like to learn more about how LLMs can transform your business, get in touch with our team today!

Expeed Software is a global software company specializing in application development, data analytics, digital transformation services, and user experience solutions. As an organization, we have worked with some of the largest companies in the world, helping them build custom software products, automate processes, drive digital transformation, and become more data-driven enterprises. Our focus is on delivering products and solutions that enhance efficiency, reduce costs, and offer scalability.