Stay Ahead with Cutting-Edge Technology—Don’t Let Your Business Fall Behind

Technology evolves at a rapid pace, and if you can't keep up, you'll soon fall behind, which means:

Losing Customers and Market Presence

Failing to adopt the latest technologies can make your company outdated, allowing competitors to outpace you.

Missing Growth Opportunities

Relying on outdated processes and repeating past mistakes means missing out on advancements that can drive meaningful growth and innovation.

Investing in Technology with No ROI

Investing in technologies that fail to deliver ROI drains your resources and limits your ability to focus on strategies that truly add value.

End-to-End Technology Solutions For Digital Excellence

We specialize in building future-ready solutions that drive business success. Our expertise spans web and mobile app development, data analytics consulting, AI consulting, digital transformation, and IoT-based applications, ensuring your business makes use of the latest technologies for sustained success. With a strong emphasis on innovation and adaptability, we help businesses scale, adapt, and maintain a competitive edge in a rapidly evolving market.

"We don’t just deliver technology—we create possibilities that drive real business impact."

Future-Proofing Your Business in 3 Simple Steps

Schedule a Consultation

Book a time with our experts to discuss your challenges, goals, and vision.

We take the time to understand your unique challenges and craft a tailored roadmap that aligns with your business goals and vision. With our deep expertise in technology solutions, we help uncover new opportunities that unlock the true potential of your business.

Determine the Right Solution

Turn your vision into a scalable strategy that defines the best-fit solution.

We collaborate to refine your ideas and develop technology-driven solutions that position your business for long-term success. Our goal is a solution that meets your requirements to the highest standard, aligns with industry best practices, and delivers maximum business value.

Achieve Your Goals

Launch confidently with a market-ready solution tailored to your needs.

Our strategic approach ensures that your solution delivers lasting impact and can scale with future opportunities. We provide ongoing support and forward-thinking strategies so the solution stays optimized as your business needs evolve.

How Our Advanced Technology Solutions Help Your Business Innovate, Scale, and Succeed

We aim to accelerate your journey toward success with next-generation technologies. Explore our success stories to learn how we work!

UTILITY & ENERGY

Energy Usage Analytics Platform

We developed a comprehensive platform that turns millions of energy usage data points into actionable insights for a prominent energy management company.

Improvement in operational accuracy

Enhancement in customer satisfaction

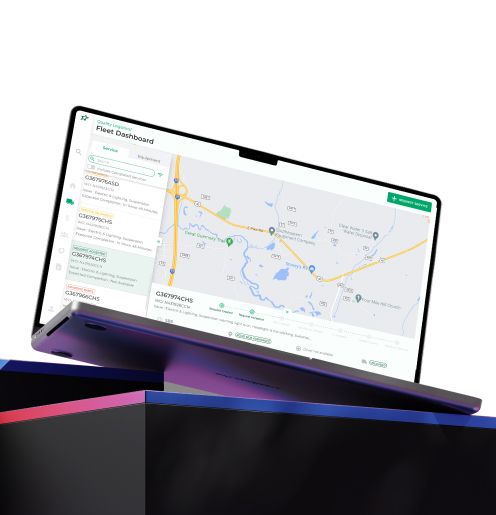

LOGISTICS

Service-Oriented UX Revamp for Logistics Efficiency

We revamped our logistics client’s application with a focus on improved accessibility to time-critical features and heuristics for more efficient decision-making. By prioritizing user experience, we delivered a solution that simplified navigation, improved design consistency, and enhanced efficiency.

Reduction in user navigation time across the app

Decrease in UI clutter

Improvement in design consistency

IOT

Custom Analytics Platform Using IoT Data Sources

The AI-enabled platform connects effortlessly with IoT ecosystems, providing customizable dashboards and real-time analytics for quick, data-driven findings.

Reduction in time spent configuring analytical dashboards

Faster anomaly detection for IoT devices

Increase in operational efficiency

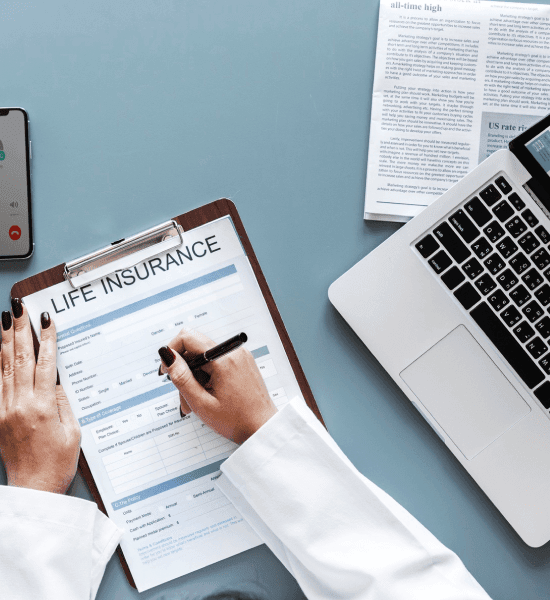

INSURANCE

Health Insurance Claim Management and Fraud Detection Analysis

We collaborated with a leading insurance provider to develop an advanced data analytics platform. The platform was designed to reduce costs by detecting fraudulent claims and to make health insurance claim management efficient and hassle-free.

Improvement in accuracy

Reduction in fraudulent claims

AI-BASED APPLICATION

AI-Driven Recruitment Software

Expeed’s AI-driven recruitment platform revolutionizes hiring processes by automating tasks such as resume screening, candidate scoring, and interview evaluation. Built on CrewAI’s agent-based framework, the tool simplifies recruitment workflows, ensuring scalability, precision, and efficiency.

Reduction in manual resume screening tasks

Faster candidate evaluation process

We serve a diverse clientele of satisfied customers. Explore more of our success stories to discover what sets us apart as a top custom software development company.

Discover Your Customized Roadmap to Success

Get the right tech solution with Expeed's Jumpstart Program. Share your problem and some basic details about your company. Get a personalized PDF guide with tailored solutions.

What You Receive

★ Personalized Insights: Get an in-depth analysis based on the distinct challenges your business faces.

★ Expert Recommendations: See how Expeed's expertise in tailored digital solutions turns recommendations into actionable steps that fit your business plans.

★ Convenience and Speed: Get a professional review quickly and understand the possible solutions with no obligation.

★ Strategic Advantage: Gain the insights needed to stay ahead when planning your technology strategy.

Learning Center

Simplifying the Latest Technology Trends... One Blog at a Time

Lead with Innovation, Be the Business Your Competitors Admire

In today's fast-moving world of technological advancement, your business should stand out. As one of the best custom software development companies that design cutting-edge technology solutions, we propel your business to the forefront of the industry. We are focused on empowering you not only to meet present challenges but also to predict future trends. We ensure that your business stays a leader and an innovator in the market.